Conquer the Web with a Powerful Server Squad: Building a Load-Balanced LXC/LXD Container Cluster

Author: Nino Stephen (@_ninostephen_)

The Challenge

- Set up 3 web servers, each in its own container.

- Add an index file to each one so that every server returns a different page.

- Set up another container which will act as a load balancer.

- Map a port from the host to the load balancer.

- Add and remove containers to see whether it still works.

Quick Introduction

The objective of this challenge is to set up 3 web servers and a load balancer, each in its own LXC/LXD container. A web server is an application that serves a website's content to visitors when they request its pages.

For sites with little traffic, the content is usually served by a single web server. But what happens when a site receives a lot of visitors every day? A single web server may not be able to handle that volume of requests. This is where a load balancer comes in. It receives requests from visitors and distributes them across several web servers, so that no single server has to carry the whole load. It also adds redundancy. If one of the web servers fails, the load balancer forwards that server's traffic to the remaining ones, so end users are not severely affected.
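To make the idea concrete, here is a toy model in shell. It is not how Nginx implements balancing. Requests are handed to the backends in turn, and any backend marked as down is skipped. The backend names and the down array are illustrative. The function stores its choice in the global variable picked instead of printing it. A $(...) subshell would discard the updated rotation position between calls, so printing would not work here.

```shell
#!/usr/bin/env bash
# Toy round-robin load balancer with failover (illustrative only).
# Requires bash 4+ for the associative array.

backends=(web1 web2 web3)   # hypothetical backend names
declare -A down=()          # down[name]=1 marks a backend as failed
next=0                      # index of the backend to try first
picked=""                   # result of the last pick_backend call

# Pick the next healthy backend in rotation, skipping failed ones.
# Returns 1 if every backend is down.
pick_backend() {
  local tries=0 candidate
  picked=""
  while (( tries < ${#backends[@]} )); do
    candidate=${backends[next]}
    next=$(( (next + 1) % ${#backends[@]} ))
    if [[ -z ${down[$candidate]:-} ]]; then
      picked=$candidate
      return 0
    fi
    tries=$(( tries + 1 ))
  done
  return 1
}
```

For example, after setting down[web2]=1, four consecutive calls to pick_backend select web1, web3, web1 and web3.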

So what's the role of LXC/LXD in this challenge? LXC stands for Linux Containers. LXD is a daemon (often expanded as Linux Container Daemon) that manages LXC containers. Containers divide a server's resources, such as memory and CPU, between isolated environments, each of which behaves like an independent operating system. This is known as OS-level virtualization. In essence, a Linux container works like a standalone machine. This might sound similar to a virtual machine. However, a virtual machine emulates hardware and runs its own operating system kernel, while an LXC container is isolated using features of the host's kernel. The host kernel is shared among all its containers. Each container gets its own namespaces, which isolate what it can see, and its own cgroups, which limit the resources it can use.
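A quick way to see the shared kernel for yourself is to compare uname -r on the host with the same command run inside a container. This sketch assumes the web1 container created in the next section exists. It skips the container part if lxc or web1 is not available.

```shell
#!/usr/bin/env bash
# Containers share the host kernel, so the kernel release reported inside
# a container should match the host's. "web1" is the container built below.

host_kernel=$(uname -r)
echo "host kernel:      $host_kernel"

if command -v lxc >/dev/null 2>&1 && lxc info web1 >/dev/null 2>&1; then
  container_kernel=$(lxc exec web1 -- uname -r)
  echo "container kernel: $container_kernel"
  if [ "$host_kernel" = "$container_kernel" ]; then
    echo "web1 is running on the host's kernel"
  fi
else
  echo "lxc or the web1 container is not available; skipping the comparison"
fi
```

With a virtual machine, by contrast, the two values would generally differ, since the guest boots its own kernel.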

The Solution

Create a container from the Alpine 3.10 image and name it web1 (the name itself doesn't matter).

```
$ lxc launch images:alpine/3.10 web1
Creating web1
Starting web1
$ lxc list
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS | LOCATION |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| web1 | RUNNING | 10.156.178.162 (eth0) | fd42:93f2:521c:69a4:216:3eff:fed2:dc46 (eth0) | CONTAINER | 0 | cicada |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
```

The lxc launch command both creates and starts the container, so web1 is already running and we can go straight to installing Nginx on it.

```
$ lxc exec web1 -- ash
~ # apk update
fetch http://dl-cdn.alpinelinux.org/alpine/v3.10/main/x86_64/APKINDEX.tar.gz
fetch http://dl-cdn.alpinelinux.org/alpine/v3.10/community/x86_64/APKINDEX.tar.gz
v3.10.5-47-g8ca2c2f3ee [http://dl-cdn.alpinelinux.org/alpine/v3.10/main]
v3.10.5-48-g49428d1d52 [http://dl-cdn.alpinelinux.org/alpine/v3.10/community]
OK: 10343 distinct packages available
~ # apk add nginx
(1/2) Installing pcre (8.43-r0)
(2/2) Installing nginx (1.16.1-r2)
Executing nginx-1.16.1-r2.pre-install
Executing busybox-1.30.1-r4.trigger
OK: 10 MiB in 21 packages
```

Once that's done, set up Nginx as a web server. First, make it a service that starts automatically whenever the container starts; on Alpine this is done with the rc-update command. Next, edit the default server configuration, which out of the box answers every request with a 404 page. We change it to point to the document root of the site (in our case **/var/www/**) and to name the file that should be served (index.html). Finally, start the Nginx service.

```
~ # rc-update add nginx default
* service nginx added to runlevel default
~ # vi /etc/nginx/conf.d/default.conf
server {
    listen 80 default_server;
    listen [::]:80 default_server;
    root /var/www/;

    location / {
        index index.html;
    }
}
~ # /etc/init.d/nginx start
* Caching service dependencies ... [ ok ]
* /run/nginx: creating directory
* /run/nginx: correcting owner
* Starting nginx ... [ ok ]
~ # exit
```
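The same setup can also be scripted from the host instead of typed into an interactive shell. This sketch assumes the container is named web1 and uses Alpine's apk and OpenRC tools as above. It writes the server block to a local default.conf. Only when the lxc client is installed does it run the remaining steps inside the container.

```shell
#!/usr/bin/env bash
# Scripted version of the web1 setup, run from the host (a sketch).
# Assumes an Alpine container named web1, as created above.
set -eu

# Write the server block locally; it matches the one edited in vi.
cat > default.conf <<'EOF'
server {
    listen 80 default_server;
    listen [::]:80 default_server;
    root /var/www/;

    location / {
        index index.html;
    }
}
EOF

if command -v lxc >/dev/null 2>&1; then
  lxc exec web1 -- apk update
  lxc exec web1 -- apk add nginx
  lxc exec web1 -- rc-update add nginx default
  lxc file push default.conf web1/etc/nginx/conf.d/default.conf
  lxc exec web1 -- nginx -t
  lxc exec web1 -- /etc/init.d/nginx start
else
  echo "lxc not found; only wrote default.conf"
fi
```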

Since this container will serve as a web server, create an index.html file on the host machine and push it into the container. The file just contains the line "Served from the web1 container!".

```
$ lxc file push index.html web1/var/www/
```

Using curl from the host machine, we can check whether the container responds to our requests.

```
$ curl 10.156.178.162
Served from the web1 container!
```

As the container works as expected, we can publish it as an image and launch the other containers from it. This saves repeating the setup, since the configuration is mostly the same for all 4 containers. The container is stopped first because lxc publish expects a stopped container unless --force is given.

```
$ lxc stop web1
$ lxc publish web1 --alias web
Instance published with fingerprint: 0ebef85c34d376405b8201ebf42a1766b1f5fb3db3211893bfc127422ede0549
$ for i in {2..4}; do lxc launch web web$i; done
Creating web2
Starting web2
Creating web3
Starting web3
Creating web4
Starting web4
```

web1 was stopped before publishing, so start it again. Every container should then be up and running.

```
$ lxc start web1
$ lxc list
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS | LOCATION |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| web1 | RUNNING | 10.156.178.162 (eth0) | fd42:93f2:521c:69a4:216:3eff:fed2:dc46 (eth0) | CONTAINER | 0 | cicada |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| web2 | RUNNING | 10.156.178.147 (eth0) | fd42:93f2:521c:69a4:216:3eff:fe1c:6e73 (eth0) | CONTAINER | 0 | cicada |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| web3 | RUNNING | 10.156.178.210 (eth0) | fd42:93f2:521c:69a4:216:3eff:fe61:583c (eth0) | CONTAINER | 0 | cicada |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
| web4 | RUNNING | 10.156.178.115 (eth0) | fd42:93f2:521c:69a4:216:3eff:feee:6f59 (eth0) | CONTAINER | 0 | cicada |
+------+---------+-----------------------+-----------------------------------------------+-----------+-----------+----------+
```
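The addresses do not have to be copied from the table by hand. They can be pulled out of the CSV output of lxc list instead. This sketch assumes one IPv4 address per container, in the form shown above (the address followed by the interface in parentheses). It also assumes that lxc list --format csv -c n4 prints one "name,address" line per container.

```shell
#!/usr/bin/env bash
# Turn "name,IPv4 (iface)" lines into "name IPv4" pairs.
# Input shape assumed to match: lxc list --format csv -c n4
parse_ipv4() {
  local name addr
  while IFS=, read -r name addr; do
    addr=${addr%% *}            # keep only the address, drop " (eth0)"
    if [ -n "$addr" ]; then     # skip containers without an IPv4 address
      printf '%s %s\n' "$name" "$addr"
    fi
  done
}
```

Usage: lxc list --format csv -c n4 | parse_ipv4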

web1 to web3 will be the web servers, and web4 will be the load balancer. Since web2 and web3 were created from web1's image, they serve the same page. Edit their index files so the containers can be told apart.

```
$ lxc file edit web2/var/www/index.html
$ lxc file edit web3/var/www/index.html
$ curl 10.156.178.162
Served from the web1 container!
$ curl 10.156.178.147
Served from the web2 container!
$ curl 10.156.178.210
Served from the web3 container!
```

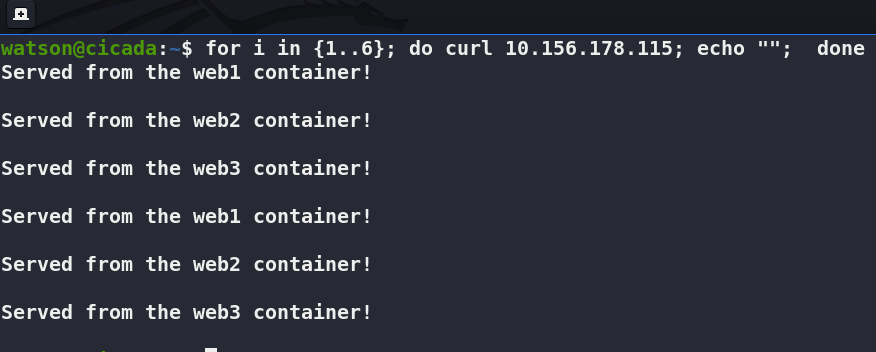

As you can see, each container responds with its own page.
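The interactive lxc file edit step can also be replaced with a loop. The loop writes a distinct index.html for each web server on the host and pushes it into the matching container. The sites/ directory is just a scratch location chosen for this sketch. The push only runs when the lxc client is installed.

```shell
#!/usr/bin/env bash
# Write a distinct index.html for each web server, then push it into the
# matching container. sites/ is a scratch directory chosen for this sketch.
set -eu

for i in 1 2 3; do
  mkdir -p "sites/web$i"
  printf 'Served from the web%s container!\n' "$i" > "sites/web$i/index.html"
  if command -v lxc >/dev/null 2>&1; then
    lxc file push "sites/web$i/index.html" "web$i/var/www/"
  fi
done
```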

Next, set up the load balancer on web4. Open a shell in the web4 container with lxc exec, which gives a root shell prompt. The only difference between this container and the others is the Nginx configuration. To make web4 act as a load balancer, we define an upstream group named cluster. It lists the servers in the cluster and the port to which requests should be forwarded. We also specify the load-balancing method. Here it is least_conn, which sends each incoming request to the server with the fewest active connections at that moment.

I avoided the default round-robin method because, in my testing, it did not work well when one of the servers was down and that server was next in line for a request.
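The least_conn rule itself is simple, as this toy shell function shows. Given the number of active connections per backend, it returns the backend with the fewest. The name=count arguments are purely illustrative. Here, ties go to the first backend listed. Nginx instead takes server weights into account and rotates among tied servers, so treat this only as an illustration of the idea.

```shell
#!/usr/bin/env bash
# Toy version of the least_conn choice. Arguments are name=count pairs
# giving each backend's active connections (illustrative values).
# Ties go to the first backend listed; Nginx instead rotates among tied
# servers and takes server weights into account.
least_conn() {
  local best="" best_count="" pair name count
  for pair in "$@"; do
    name=${pair%%=*}
    count=${pair#*=}
    if [ -z "$best_count" ] || [ "$count" -lt "$best_count" ]; then
      best=$name
      best_count=$count
    fi
  done
  printf '%s\n' "$best"
}
```

For example, least_conn web1=4 web2=1 web3=2 prints web2.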

```
$ lxc exec web4 -- ash
~ # cd /etc/nginx/conf.d/
/etc/nginx/conf.d # vi default.conf
upstream cluster {
    least_conn;
    server 10.156.178.162:80;
    server 10.156.178.147:80;
    server 10.156.178.210:80;
}

server {
    listen 80 default_server;
    listen [::]:80 default_server;
    root /var/www/;

    location / {
        proxy_pass http://cluster;
    }
}
/etc/nginx/conf.d # nginx -t
nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
nginx: configuration file /etc/nginx/nginx.conf test is successful
/etc/nginx/conf.d # /etc/init.d/nginx reload
* Reloading nginx configuration ...
```

The nginx -t command checks the configuration: it makes sure the entries are valid and reports any errors. Once the check passes, reload the Nginx service so that it picks up the new configuration. That should be it. The load balancer should now be working.
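The backend addresses may change, for example after a container is recreated. In that case the load balancer's configuration can be regenerated rather than edited by hand. This sketch writes lb.conf from a list of addresses. The addresses are the ones from this walkthrough, so substitute your own. When the lxc client is present, the script pushes the file to web4 and reloads Nginx, but only if nginx -t succeeds.

```shell
#!/usr/bin/env bash
# Regenerate web4's load balancer config from a list of backend addresses.
# The addresses are the ones used in this walkthrough; substitute your own.
set -eu
backends=(10.156.178.162 10.156.178.147 10.156.178.210)

{
  printf 'upstream cluster {\n    least_conn;\n'
  for ip in "${backends[@]}"; do
    printf '    server %s:80;\n' "$ip"
  done
  printf '}\n\n'
  cat <<'EOF'
server {
    listen 80 default_server;
    listen [::]:80 default_server;

    location / {
        proxy_pass http://cluster;
    }
}
EOF
} > lb.conf

if command -v lxc >/dev/null 2>&1; then
  lxc file push lb.conf web4/etc/nginx/conf.d/default.conf
  # nginx -t fails on a broken config, which skips the reload.
  lxc exec web4 -- sh -c 'nginx -t && /etc/init.d/nginx reload'
fi
```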

```
$ curl 10.156.178.115
Served from the web1 container!
$ curl 10.156.178.115
Served from the web2 container!
$ curl 10.156.178.115
Served from the web3 container!
$ curl 10.156.178.115
Served from the web1 container!
```
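Instead of running curl by hand, a short loop can send a batch of requests through the load balancer and count which backend answered each one. LB_URL defaults to web4's address from this walkthrough and can be overridden. Each page is assumed to end with a newline, so that every response lands on its own line.

```shell
#!/usr/bin/env bash
# Send a batch of requests through the load balancer and count which backend
# answered. LB_URL defaults to web4's address from this walkthrough.
LB_URL=${LB_URL:-http://10.156.178.115/}

# Fetch the page n times (default 9); each response is one line of output.
probe() {
  local n=${1:-9} i
  for (( i = 0; i < n; i++ )); do
    curl -s --max-time 5 "$LB_URL"
  done
}

# Count identical lines, most frequent first.
tally() {
  sort | uniq -c | sort -rn
}
```

Usage: probe 9 | tally. With one web container stopped, only the remaining two should show up in the counts.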

Stopping any of the web server containers doesn't break the site, but the first request sent to a server that is down takes noticeably longer. Once Nginx notices that the server is down, it passes the request on to another server in the upstream group. Subsequent requests are answered at normal speed, because Nginx skips the failed server for a while (10 seconds by default, set by the fail_timeout parameter).

```
$ lxc stop web1
$ curl 10.156.178.115
Served from the web2 container!
$ curl 10.156.178.115
Served from the web3 container!
$ lxc start web1
$ lxc stop web2
$ curl 10.156.178.115
Served from the web3 container!
$ curl 10.156.178.115
Served from the web3 container!
$ curl 10.156.178.115
Served from the web1 container!
$ curl 10.156.178.115
Served from the web3 container!
```

The delay could be avoided with active health checks, using the health_check directive in the location block next to proxy_pass. However, that directive is only available in the commercial NGINX Plus.
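Open-source Nginx does offer passive health checks. The max_fails and fail_timeout parameters on each upstream server, combined with a shorter proxy_connect_timeout, can shorten the stall when a backend is down. Below is a sketch of web4's configuration with those settings. The specific values are illustrative, not tuned recommendations.

```shell
#!/usr/bin/env bash
# web4 config with passive health checks (open-source Nginx).
# After max_fails failed attempts within fail_timeout, a server is treated as
# unavailable for fail_timeout. Values are illustrative, not tuned.
cat > lb.conf <<'EOF'
upstream cluster {
    least_conn;
    server 10.156.178.162:80 max_fails=1 fail_timeout=30s;
    server 10.156.178.147:80 max_fails=1 fail_timeout=30s;
    server 10.156.178.210:80 max_fails=1 fail_timeout=30s;
}

server {
    listen 80 default_server;
    listen [::]:80 default_server;

    location / {
        proxy_pass http://cluster;
        # Give up connecting to a dead backend after 2s (default is 60s),
        # then retry on another server (error/timeout is also the default).
        proxy_connect_timeout 2s;
        proxy_next_upstream error timeout;
    }
}
EOF
```

The file would then be pushed to web4 and applied the same way as before: nginx -t, then /etc/init.d/nginx reload.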

The last part of the challenge is mapping a host port to the load balancer's port. Port forwarding can be done with iptables, but first make sure that IP forwarding is enabled on the host. If the command below prints 0, enable it with sudo sysctl -w net.ipv4.ip_forward=1.

```
$ sudo sysctl net.ipv4.ip_forward
net.ipv4.ip_forward = 1
$ sudo iptables -t nat -A PREROUTING -i eth0 -p tcp -m tcp --dport 8000 -j DNAT --to 10.156.178.115:80
```

With this rule, port 8000 on the host is mapped to port 80 of the load balancer container. Replace eth0 with the name of the host's network interface if it differs. There is a catch: the rule lives in the PREROUTING chain, which only sees packets arriving from the network. Requests made from the host itself are therefore not forwarded, so you can't curl the site from the host. Requests to the host's port 8000 from another device, however, do reach the load-balanced site, so test it from a second machine.
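There are two ways around that catch. The first is an LXD proxy device: LXD itself listens on the host port and forwards each connection into web4, so it also works for requests made on the host. The second is an extra iptables rule in the nat OUTPUT chain, which handles packets generated on the host since those never pass through PREROUTING. That rule is limited here to the host's non-loopback addresses, because DNAT of 127.0.0.1 traffic needs additional kernel settings. The device name http-lb is an arbitrary choice. The script only prints the commands unless APPLY is set to proxy or iptables.

```shell
#!/usr/bin/env bash
# Forward host port 8000 to the load balancer in a way that also works for
# requests made on the host itself. Values match this walkthrough.
HOST_PORT=8000
LB_ADDR=10.156.178.115
LB_PORT=80

# Option 1: LXD proxy device ("http-lb" is an arbitrary device name).
# LXD listens on the host port and connects to port 80 inside web4.
proxy_cmd=(lxc config device add web4 http-lb proxy
           "listen=tcp:0.0.0.0:$HOST_PORT" "connect=tcp:127.0.0.1:$LB_PORT")

# Option 2: nat OUTPUT rule for packets generated on the host, which skip
# PREROUTING. Loopback (127.0.0.0/8) is excluded: DNAT of that traffic to
# another host needs extra kernel settings not covered here.
output_cmd=(sudo iptables -t nat -A OUTPUT -p tcp --dport "$HOST_PORT"
            -m addrtype --dst-type LOCAL '!' -d 127.0.0.0/8
            -j DNAT --to-destination "$LB_ADDR:$LB_PORT")

case "${APPLY:-}" in
  proxy)    "${proxy_cmd[@]}" ;;
  iptables) "${output_cmd[@]}" ;;
  *)        # Default: only print the commands (shell-quoted) for review.
            printf '%q ' "${proxy_cmd[@]}"; echo
            printf '%q ' "${output_cmd[@]}"; echo ;;
esac
```

With the proxy device in place, curl http://127.0.0.1:8000 on the host should reach the load balancer as well.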

Notes

Dear readers, as you might notice, this is my first article here, and it is by no means the greatest. This article, along with another one, was written years ago and was initially hosted on GitBook. I'm migrating them to Hashnode to see how the engagement compares and to try out the platform's features before publishing my main work related to Project Novlandia. These first two articles aren't part of the Project Novlandia series.

That being said, I would appreciate all your support. Project Novlandia is an ambitious project of mine. Even though I can't give you an ETA for when the articles in that series will be published here, I would love to have you join me on that journey.

NB: As mentioned above, these articles were written years ago, and young, stupid me didn't cite the resources I referred to while writing them. Sadly, I don't remember the sources either. So, if you feel that this article draws on any of your previously published material, I would like you to know that I appreciate you and acknowledge that credit is due. That being said, happy reading!